EDIT: SORRY, WAS BEING A MORON.

See below.

Edited, May 28th 2013 5:27am by Dizmo

ARR PC Performance (Gridania ONLY)

Dizmo wrote:

555m mobile GPU

Core i7 3610QM processor (quad core)

8GB RAM

1920*1080

Medium settings

Very laggy. This is a PS3 game, so should really be playable on high end hardware from 7 years ago, let alone an upper middle range gaming laptop from 2012. It's still too demanding for the level of graphics.

Edited, Apr 30th 2013 7:22pm by Dizmo

Square-Enix, God bless 'em, they just don't know how to code a PC game without it being morbidly obese, figuratively speaking.

Edited, Apr 30th 2013 8:09pm by electromagnet83

electromagnet83 wrote:

I have a GTX 470 and mid-weekend upgraded to a 660 Ti. Both scored me between 4k and 5k, and Gridania ran like trash at 1920x1080 on maximum settings. A bit disappointing considering I ran 1.0 on max with relatively no slowdown. The only thing I would disable on that version was ambient occlusion because for the life of me I couldn't tell a difference in appearance, only in frame rate. Anyways. I'm guessing to run Gridania at max settings and in all its beauty you'll need a score of at least 6-7k on the benchmark.

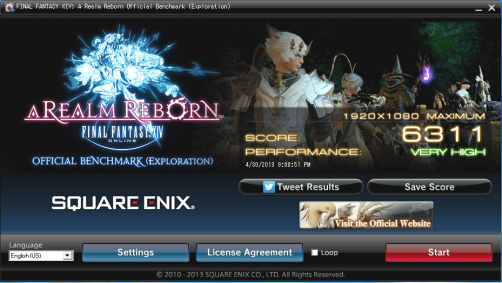

Just got my pc up and running, evil bent pin on the processor and here is the benchmark with the 660ti in it

Specs

AMD Phenom II Black Edition x4 965 3.4 GHz

12 GB Ram

Seagate 1 TB HD

Windows 8 - 64 bit

ASRock 970 EXTREME3 AM3+ AMD 970 SATA 6Gb/s USB 3.0 ATX AMD Motherboard

GeForce GTX 660 TI (OC Edition)

Can say I'm fairly impressed compared to what I was using. But then again the score was over 6k just with a low resolution (medium setting) with my GTS 250

Edit: added card in the list

Edited, Apr 30th 2013 9:36pm by SillyHawk

SillyHawk wrote:

electromagnet83 wrote:

I have a GTX 470 and mid-weekend upgraded to a 660 Ti. Both scored me between 4k and 5k, and Gridania ran like trash at 1920x1080 on maximum settings. A bit disappointing considering I ran 1.0 on max with relatively no slowdown. The only thing I would disable on that version was ambient occlusion because for the life of me I couldn't tell a difference in appearance, only in frame rate. Anyways. I'm guessing to run Gridania at max settings and in all its beauty you'll need a score of at least 6-7k on the benchmark.

Just got my pc up and running, evil bent pin on the processor and here is the benchmark with the 660ti in it

Specs

AMD Phenom II Black Edition x4 965 3.4 GHz

12 GB Ram

Seagate 1 TB HD

Windows 8 - 64 bit

ASRock 970 EXTREME3 AM3+ AMD 970 SATA 6Gb/s USB 3.0 ATX AMD Motherboard

Can say I'm fairly impressed compared to what I was using. But then again the score was over 6k just with a low resolution with my GTS 250

Looks like you're ready to rock and roll. VERY similar setup to mine as well. If you wouldn't mind, I'd really love to know how that score translates to actual gameplay in phase 3 (if you're in it). Maybe that way I can make a more informed decision on what gfx card to purchase. PM me or something during that time.

Edited, Apr 30th 2013 9:41pm by electromagnet83

I just did a few upgrades over the past couple of weeks and ya, the game is optimized fairly well. It seems to use both the CPU and the GPU to get good results. I initially had a Q9550 with a GTX 460 and scored about 3700 on maximum. Then I switched out the 460 for an HD 7950 and got a score of 5500. Finally, I upgraded to a 3570K with new RAM and got 6800. My 3570K is at stock speeds now, so there's a little wiggle room to maybe hit that 7000 mark, but I might need a better cooler for that as this chip runs a bit hotter than my previous Q9550.

Xoie wrote:

Threx wrote:

Edit: One of the CPU's cores was running over 90% while the other 3 cores were about 60%. So the game isn't optimized well enough for multiple cores.

If all your cores were running between 50 and 100% isn't that the definition of optimized? None of them were under or over-utilized.

Not in my book. GW2, for example, is much better optimized on 4 cores (at least on my Phenom 955). When one core is near 100%, at least two others are around 80%.

Threx wrote:

Xoie wrote:

Threx wrote:

Edit: One of the CPU's cores was running over 90% while the other 3 cores were about 60%. So the game isn't optimized well enough for multiple cores.

If all your cores were running between 50 and 100% isn't that the definition of optimized? None of them were under or over-utilized.

Not in my book. GW2, for example, is much better optimized on 4 cores (at least on my Phenom 955). When one core is near 100%, at least two others are around 80%.

Yeah, but look at GW2 and compare it to ARR and it's easy to see why it's probably better optimized.

Play Experience in Gridania

Setting: Max - generally good with similar comments to below but some frame rate issues when screen has lots of other characters on it.

Setting: High - Excellent, great detail, great textures (I'm not one of these people who zoom in as far as possible to look for flaws - I just play the game). Smooth animation, minimal frame rate issues (if any at all). Looks virtually as good as max anyway unless you start looking for impressive lighting effects which seem to be more prevalent at Max settings.

Now the system: Built this for release of Version 1.0 so was good then - less so now but played 1.0 very well indeed.

System:

Windows 7 Home Premium 64-bit (6.1, Build 7601) Service Pack 1 (7601.win7sp1_gdr.130104-1431)

Intel(R) Core(TM) i7 CPU 960 @ 3.20GHz

12279.113MB

NVIDIA GeForce GTX 480(VRAM 4048 MB) 9.18.0013.1090

Benchmark score at Max settings.

Score:4982

Average Framerate:41.511

Performance:High

-Easily capable of running the game. Should perform well, even at higher resolutions.

Screen Size: 1920x1080

Screen Mode: Full Screen

Graphics Presets: Maximum

SillyHawk wrote:

electromagnet83 wrote:

I have a GTX 470 and mid-weekend upgraded to a 660 Ti. Both scored me between 4k and 5k, and Gridania ran like trash at 1920x1080 on maximum settings. A bit disappointing considering I ran 1.0 on max with relatively no slowdown. The only thing I would disable on that version was ambient occlusion because for the life of me I couldn't tell a difference in appearance, only in frame rate. Anyways. I'm guessing to run Gridania at max settings and in all its beauty you'll need a score of at least 6-7k on the benchmark.

Just got my pc up and running, evil bent pin on the processor and here is the benchmark with the 660ti in it

Specs

AMD Phenom II Black Edition x4 965 3.4 GHz

12 GB Ram

Seagate 1 TB HD

Windows 8 - 64 bit

ASRock 970 EXTREME3 AM3+ AMD 970 SATA 6Gb/s USB 3.0 ATX AMD Motherboard

GeForce GTX 660 TI (OC Edition)

Can say I'm fairly impressed compared to what I was using. But then again the score was over 6k just with a low resolution (medium setting) with my GTS 250

Edit: added card in the list

Edited, Apr 30th 2013 9:36pm by SillyHawk

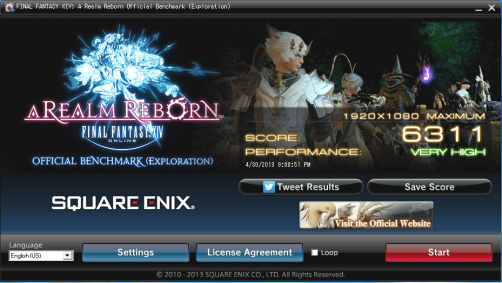

I have a similar setup and I can't break the high 5800s. I can hit 6300 if I turn off distance draw and lower ambient occlusion to low.

AMD FX-6300 4.2 GHz OC

16 GB RAM

120 GB OCZ SSD

Asrock 990FX Extreme9

Sapphire 7870 OC GHz OC

I think this whole thread illustrates that, in my opinion and experience, 2.0 doesn't require any less of a system than 1.0 did.

electromagnet83 wrote:

I think this whole thread illustrates that, in my opinion and experience, 2.0 doesn't require any less of a system than 1.0 did.

It depends what you're talking about...

If you're just talking about running the game AT ALL, then yeah.. 2.0 requires less of a system than 1.0 did. That's intentional.

If you're talking about running the game well, then you're probably right.

I'm running 2.0 on the system I built to run 1.0 (except for my graphics card that died on me). The 2.0 beta runs MUUUUUCH better than the 1.0 beta did.

in gridania of course... don't hurt me

Edited, May 8th 2013 12:14pm by Callinon

electromagnet83 wrote:

I think this whole thread illustrates that, in my opinion and experience, 2.0 doesn't require any less of a system than 1.0 did.

If anything it gives us something to talk about while waiting to see how the game actually runs on our rigs

1.0 ran like **** on my system. I played it anyway, at low HD resolution instead of full screen, because it was enjoyable near the end. But it was so poorly optimized that even my very good system struggled to run it well.

2.0 beta runs like silk. It'd run even better if it wasn't for my 2.5 year old crappy processor. The graphics are crisp and clear, and the graphical lag is non existent.

Catwho wrote:

1.0 ran like **** on my system. I played it anyway, at low HD resolution instead of full screen, because it was enjoyable near the end. But it was so poorly optimized that even my very good system struggled to run it well.

2.0 beta runs like silk. It'd run even better if it wasn't for my 2.5 year old crappy processor. The graphics are crisp and clear, and the graphical lag is non existent.

I'm so jelly, and the complete opposite. Except for a few slowdowns here and there, 1.0 ran amazingly at 1080p on my rig. 2.0, not so bueno.

It's hard to compare. To me the detail in 2.0 is greatly improved, but the textures from 1.0 seem to be shinier. This is just my opinion, though; these visual impressions will vary from person to person. The advantage of 2.0 is that even on crap settings the game looks good, whereas 1.0 on crap settings looked like crap. There's no doubt, though, that 2.0 is better optimized, since everything was 10x more fluid going from 1.0 to 2.0 on my Q9550.

Originally played 1.0 on an Asus G51J-X2: Intel i7, 8 GB, Windows 7 Home Premium 64-bit, with a GTS 360M. The only problem I ever had was that with 1.0, if I played in Gridania, the game would crash in about 10-15 min. So I could play on med settings in 2 cities but low in Gridania?

In ARR I recently played on the same system for hours without a problem, on medium graphics.

I just upgraded to a G75VW: i7, 12 GB, Windows 8 64-bit, GTX 660M. It runs smooth on all settings so far, so I put my old system on eBay the other day.

Dizmo wrote:

650m mobile GPU

Core i7 3610QM processor (quad core)

8GB RAM

1920*1080

Medium settings

Very laggy. This is a PS3 game, so should really be playable on high end hardware from 7 years ago, let alone an upper middle range gaming laptop from 2012. It's still too demanding for the level of graphics.

Edited, Apr 30th 2013 7:22pm by Dizmo

OKAY, I must apologise here. My laptop was defaulting to the onboard graphics without me realizing. -_-

I ran the benchmark again out of curiosity and tried changing the global preferred GPU to the 650m instead of the integrated chip, and my benchmark score roughly tripled (from ~1300 to 3890 at 1920x1080 on standard settings).

I'm an idiot.

Edited, May 28th 2013 4:15am by Dizmo

Edited, Jun 12th 2013 8:12pm by Dizmo

No you aren't, it's a very easy mistake to make, especially if you don't have it set to ask you which graphics card to use when you open a program for the first time and it just defaults to the integrated card. I've made that mistake myself. It seems like common sense to me to run everything on the better GPU, but I suppose sometimes if you are trying to save on battery power running the lower card might make more sense for simple stuff like web browsing.

I guess it was not completely my fault. The graphics chip selection was set to auto in the Nvidia menu and despite my computer always being plugged in, it was using the integrated chip for everything. >_>

All times are in CST
